LLM-powered EdTech platforms engage students in their studies through an entirely new, conversational interface. Nebuly is at the forefront, helping many companies harness these LLMs and surfacing user insights that increase student engagement.

Companies that provide conversational AI for students often face several critical issues:

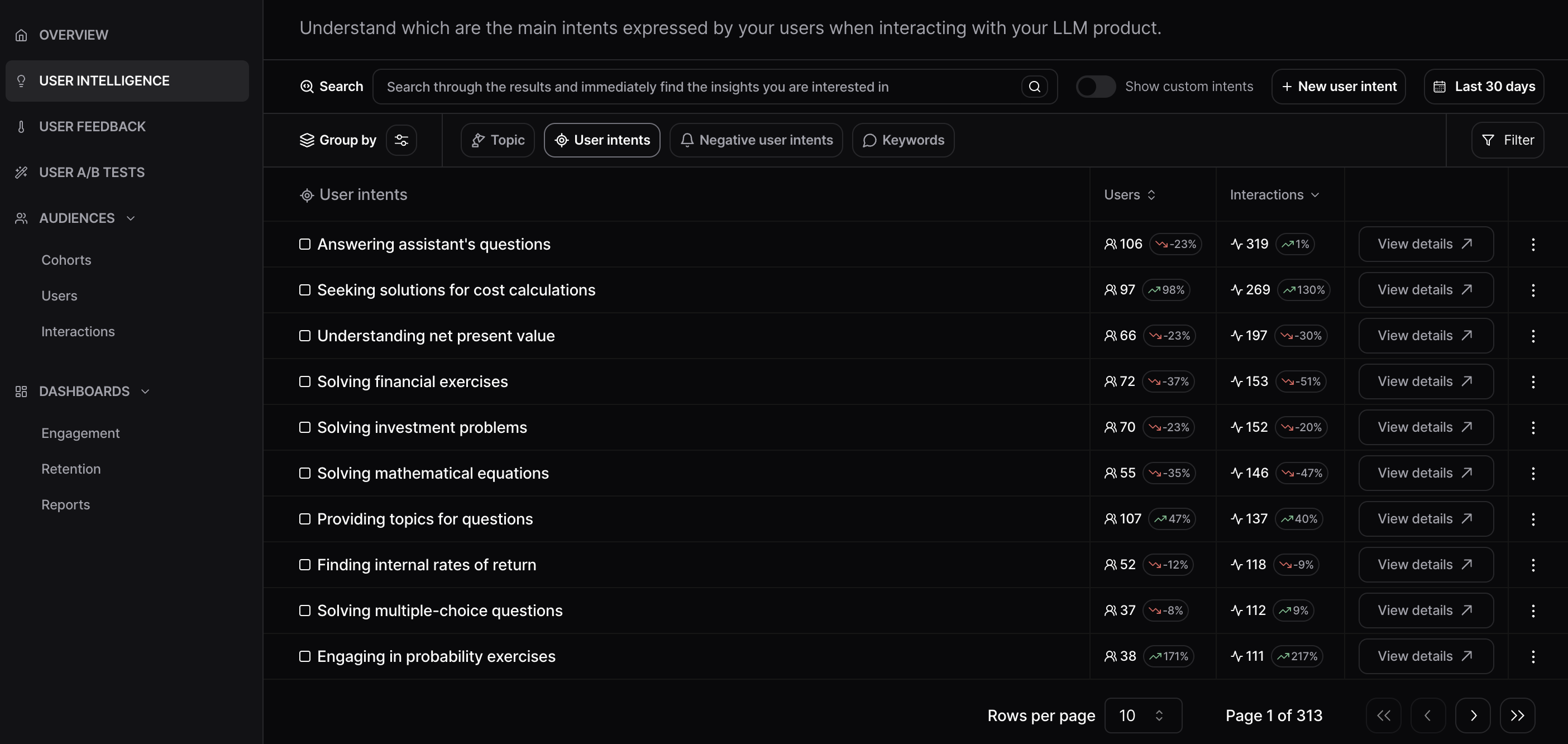

1. Understanding student performance. These platforms need to determine which subjects students are mastering and where they are struggling. This understanding is essential for tailoring instructional content and support.

2. Content security. Keeping discussions within the learning platform appropriate is vital to maintaining a safe learning environment. Manually monitoring discussions for harmful user content is not only inefficient but also risky. Inadequate oversight could let inappropriate or dangerous content slip through, potentially exposing students to harm and the company to severe legal liability. Such lapses could also breach compliance with educational standards, eroding trust and potentially leading schools to cancel their contracts.

3. Personalized education. To improve learning outcomes, educators need detailed insight into each student's performance. Without the ability to personalize instruction based on data-driven insights, educational content may fail to meet diverse learning needs. This lack of personalization can diminish educational impact and reduce student engagement and satisfaction, both of which are crucial for retaining contracts with educational institutions.

Before integrating tools like Nebuly, EdTech companies typically struggled with the manual work involved in analyzing each student-LLM interaction. The sheer volume of data generated made it nearly impossible to understand student behaviour and effectively personalize instruction.